What is an MCP-Client?

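MCP-Client is a key component of the Model Context Protocol architecture. It acts as the bridge between AI models (large language models such as Claude and GPT) and external data sources, tools, and services.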

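MCP (Model Context Protocol) is an open standard protocol first proposed and open-sourced by Anthropic in late 2024. It is designed to solve the problem of connecting large language models (LLMs) to the outside world. Its core value is breaking down the "information silo" that limits AI models: models can access and process real-time data in a standardized way, which substantially broadens the range of applications for large models.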

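The MCP architecture has three key components:

- MCP Server: a lightweight service program that connects to a specific data source or tool (a database, an API, and so on) and exposes standardized functional interfaces according to the MCP specification. Each MCP server encapsulates a particular capability, such as file retrieval or database queries.
- MCP Client: a connector embedded in an AI application. It maintains a one-to-one connection with an MCP server and acts as the bridge between the model and the server. It discovers available services, sends invocation requests, retrieves results, and passes this information to the AI model.
- Host: the application or environment that runs the LLM, such as the Claude desktop app or the Cursor IDE. By integrating MCP clients, the host lets its model call the capabilities of external MCP servers.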

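An MCP-Client talks to MCP servers through standardized interfaces built on JSON-RPC 2.0. For each server it connects to, it discovers the tools that server provides and presents them to the LLM in structured form, so the model knows which tools exist and what they do and can decide, based on the user's request, when and how to call them.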
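To make the JSON-RPC 2.0 exchange concrete, here is a minimal sketch of the messages a client typically sends: the `initialize` handshake, the `notifications/initialized` notification, `tools/list`, and `tools/call`. The method names follow the MCP specification; the protocol version string, the client name, and the `get_forecast` tool with its arguments are illustrative assumptions, not taken from this article's code.

```python
import json
from itertools import count

# Monotonically increasing request ids; JSON-RPC 2.0 uses the id to match replies to requests.
_ids = count(1)

def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request (it carries an id, so a reply is expected)."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg

def make_notification(method, params=None):
    """Build a JSON-RPC 2.0 notification (no id, so no reply is expected)."""
    msg = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg

# 1. Handshake: the client announces its protocol version and identity (values are illustrative).
init = make_request("initialize", {
    "protocolVersion": "2024-11-05",
    "capabilities": {},
    "clientInfo": {"name": "my-mcp-client", "version": "0.1.0"},
})
# 2. Tell the server the handshake is complete.
initialized = make_notification("notifications/initialized")
# 3. Ask which tools the server offers.
list_tools = make_request("tools/list")
# 4. Invoke one tool by name; "get_forecast" and its arguments are hypothetical.
call_tool = make_request("tools/call", {
    "name": "get_forecast",
    "arguments": {"latitude": 39.9, "longitude": 116.4},
})

for msg in (init, initialized, list_tools, call_tool):
    print(json.dumps(msg))
```

Only requests get an `id`; the server echoes it in its reply, which is how a client with several requests in flight knows which answer belongs to which call.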

Why write your own MCP-Client?

Building your own MCP-Client has several important motivations and advantages:

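- Customization: general-purpose MCP clients on the market (for example Claude Desktop, Cursor, and Cline) may not meet the needs of a specific business scenario. Building your own lets you tailor it to an organization's or individual's requirements, for example by adding specific security checks, data filtering, or domain-specific optimizations.
- System integration: a self-built MCP-Client can be integrated seamlessly with existing systems and adapted to the technology stack and architecture already in place, reducing compatibility problems and improving development efficiency.
- Data privacy and security: for sensitive data or internal systems, a self-built MCP-Client can enforce stricter access control and data protection, ensuring that sensitive information is not accessed or leaked without authorization.
- Performance optimization: performance can be tuned for specific use cases. For example, where high-frequency, low-latency access is required, a custom MCP-Client can reduce communication overhead and improve response times.
- Extended features: you can add enhancements beyond the standard MCP protocol, such as advanced caching, request queue management, load balancing, or context-handling logic optimized for particular AI models.
- Control and maintainability: for core business that depends on AI capabilities, a self-built MCP-Client means better control and maintainability. When requirements change or problems arise, you can adjust and fix things quickly instead of waiting on a third-party vendor.
- Support for multiple AI models: a self-built MCP-Client can be designed to support several LLMs (such as Claude and GPT) at once and to pick the most suitable model for each task, making the system more flexible.
- Special protocol support: scenarios that require special communication protocols or data formats can be handled by implementing these non-standard requirements yourself.
- Lower dependency risk: relying less on third-party services makes the system more independent and resilient. If a third-party service changes or goes down, a self-built system can adapt more quickly.
- Building expertise: by developing its own MCP-Client, a team accumulates know-how in integrating AI with external systems, which is valuable for long-term AI strategy and capability building.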

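In practice, as AI applications mature and spread, more and more organizations are recognizing that AI infrastructure (including the MCP-Client) is a strategic asset. Developing these components in-house gives a better technical fit and can provide a competitive edge, especially in fields where AI is critical to the business.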

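The openness of the MCP protocol is precisely what makes a self-built MCP-Client possible: organizations and developers can create custom solutions within a standardized framework while staying interoperable with the wider ecosystem. By writing their own MCP-Client, developers can make full use of LLM capabilities while keeping complete control over the system architecture and data flow.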

Writing the MCP-Client

Project setup

For this lab you need an LLM API that is compatible with the OpenAI protocol, such as DeepSeek V3, the Qwen series, or Moonshot AI.

To write the MCP Client, we reuse the project created in the previous section ("Implementing an MCP-Server from Scratch").

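First, create an environment file named .env to hold your model's settings. It contains three fields:

- OPENAI_API_KEY: the model's API key
- BASE_URL: the model's request URL
- MODEL: the model name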

Any model works here. I use Moonshot, but any other LLM is fine.
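The article does not show how these values are read; python-dotenv's `load_dotenv()` is a common choice. As a dependency-free alternative, the sketch below parses a simple KEY=VALUE .env file with the standard library and checks that the three fields are present. The sample key, URL, and model name are placeholders, and the parser deliberately handles only a small subset of .env syntax.

```python
import os

# The three settings the .env file is described as containing.
REQUIRED_KEYS = ("OPENAI_API_KEY", "BASE_URL", "MODEL")

def parse_env(text):
    """Parse simple KEY=VALUE lines; blank lines and # comments are skipped.
    Minimal subset of .env syntax: no multi-line values, no variable expansion."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Strip optional matching quotes, e.g. MODEL="your-model-name".
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        values[key.strip()] = value
    return values

def load_settings(path=".env"):
    """Read the .env file, export its values to os.environ without overriding
    variables that are already set, and return the three settings the client needs."""
    with open(path, encoding="utf-8") as f:
        values = parse_env(f.read())
    for key, value in values.items():
        os.environ.setdefault(key, value)
    missing = [k for k in REQUIRED_KEYS if not os.environ.get(k)]
    if missing:
        raise KeyError(f"missing settings in {path}: {', '.join(missing)}")
    return {k: os.environ[k] for k in REQUIRED_KEYS}

SAMPLE_ENV = """\
# Placeholder values: replace with your own provider's key, URL and model name
OPENAI_API_KEY=sk-your-key
BASE_URL=https://api.example.com/v1
MODEL="your-model-name"
"""

print(parse_env(SAMPLE_ENV))
```

With the official `openai` package, the returned values would typically be passed as `OpenAI(api_key=..., base_url=...)`, with `MODEL` used as the `model` argument of each chat completion call.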

MCP-Prompt

The models that currently support, or are deeply integrated with, the MCP protocol mainly include the Claude and GPT series.

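Chinese LLM providers have generally not done training specifically targeted at the MCP protocol. To use MCP with these domestic models, you therefore need to write a structured MCP-Prompt and supply it as the system prompt, so that the model can work with the MCP protocol.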
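Supplying the MCP-Prompt as a system prompt simply means putting it in the first message, with role `system`, of an OpenAI-compatible chat completion request. The sketch below builds such a request with the standard library without sending it; the base URL, key, model name, and the abbreviated prompt text are placeholders, and a real client would more likely use an SDK than raw `urllib`.

```python
import json
import urllib.request

def build_chat_request(base_url, api_key, model, system_prompt, user_message):
    """Build (but do not send) an OpenAI-compatible POST /chat/completions request
    whose first message carries the MCP-Prompt as the system prompt."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body, ensure_ascii=False).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

# Placeholder settings; the system prompt would normally be read from MCP_Prompt.txt.
req = build_chat_request("https://api.example.com/v1", "sk-your-key", "your-model-name",
                         "You are an AI assistant ...", "What's the weather in CA?")
print(req.full_url)  # https://api.example.com/v1/chat/completions
```

Sending it with `urllib.request.urlopen(req)` would return a JSON body whose `choices[0]["message"]["content"]` holds the model's reply, which the client then inspects for a tool call.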

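To build this prompt, I proxied the model traffic through Cloudflare, intercepted Cursor's MCP requests, kept the MCP-related parts of the prompt, and removed everything else. The result is the prompt below; you can of course modify it to suit your own needs.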

Create a file named MCP_Prompt.txt in the project and put the following content in it.

```
You are an AI assistant, you can help users solve problems, including but not limited to programming, editing files, browsing websites, etc.
```

In this prompt I define a special marker, <$MCP_INFO$>, which is later replaced with the descriptions of the MCP Servers and their MCP Tools.
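A minimal sketch of how the marker might be filled: each tool's name, description, and JSON input schema are rendered as text and substituted for `<$MCP_INFO$>`. The rendering layout, the server name `weather`, the sample tool, and the short template string are assumptions for illustration; the article's own formatting is not shown.

```python
import json

PLACEHOLDER = "<$MCP_INFO$>"

def render_mcp_info(servers):
    """Render {server_name: [tool, ...]} as plain text for the system prompt.
    Each tool is a dict with "name", "description" and "inputSchema", the fields
    an MCP tools/list result provides for every tool."""
    blocks = []
    for server_name, tools in servers.items():
        lines = [f"## MCP Server: {server_name}"]
        for tool in tools:
            lines.append(f"### Tool: {tool['name']}")
            lines.append(f"Description: {tool.get('description', '')}")
            schema = json.dumps(tool.get("inputSchema", {}), ensure_ascii=False)
            lines.append(f"Input schema: {schema}")
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks)

def build_system_prompt(template, servers):
    """Replace the marker in the prompt template with the rendered tool descriptions."""
    if PLACEHOLDER not in template:
        raise ValueError(f"template has no {PLACEHOLDER} marker")
    return template.replace(PLACEHOLDER, render_mcp_info(servers))

# Hypothetical tool list for a weather server.
weather_tools = [{
    "name": "get_alerts",
    "description": "Get weather alerts for a US state.",
    "inputSchema": {"type": "object",
                    "properties": {"state": {"type": "string"}},
                    "required": ["state"]},
}]
# In the client the template would come from open("MCP_Prompt.txt", encoding="utf-8").read().
template = "You are an AI assistant.\n\nAvailable tools:\n" + PLACEHOLDER
prompt = build_system_prompt(template, {"weather": weather_tools})
print(prompt)
```

Raising on a missing marker is a deliberate choice: a silently unfilled prompt would leave the model with no tool descriptions and no obvious error.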

The stdio transport

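The stdio transport is the simplest way to communicate and is typically used to pass messages between local tools. It uses standard input and output (stdin/stdout) as the data channel and suits interaction between local processes.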
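The MCP specification defines the stdio transport as newline-delimited JSON-RPC messages: the client launches the server as a child process, writes requests to its stdin, and reads responses from its stdout. To show just that mechanism with the standard library, the sketch below launches a toy child process (`TOY_SERVER`, a hypothetical stand-in that is neither the weather server nor a complete MCP server) and exchanges one `tools/list` request with it. A real client would normally use the official MCP SDK rather than raw pipes.

```python
import json
import subprocess
import sys

# Toy stand-in for an MCP server, used only to show the transport: it reads one
# JSON-RPC message per line from stdin and writes one reply per line to stdout.
# It answers tools/list only and is NOT a real MCP server.
TOY_SERVER = r'''
import json, sys
while True:
    line = sys.stdin.readline()
    if not line:          # EOF: the client closed our stdin
        break
    req = json.loads(line)
    if req.get("method") == "tools/list":
        result = {"tools": [{"name": "echo", "description": "demo tool"}]}
    else:
        result = {}
    sys.stdout.write(json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result}) + "\n")
    sys.stdout.flush()    # stdout is block-buffered on a pipe, so flush after each message
'''

def send_request(proc, req_id, method, params=None):
    """Write one newline-delimited JSON-RPC request and read one reply line."""
    msg = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    # json.dumps emits no raw newlines, so one message is exactly one line.
    proc.stdin.write(json.dumps(msg) + "\n")
    proc.stdin.flush()
    return json.loads(proc.stdout.readline())

if __name__ == "__main__":
    # Launch the "server" as a child process connected to us through pipes.
    with subprocess.Popen([sys.executable, "-c", TOY_SERVER],
                          stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True) as proc:
        reply = send_request(proc, 1, "tools/list")
        print(reply["result"]["tools"])
        proc.stdin.close()  # EOF tells the child to exit
```

Because each message is exactly one line, the reader never needs a length prefix; this is also why a server must not print stray output to stdout.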

We start with the weather MCP Server built in the previous section ("Implementing an MCP-Server from Scratch") and write an MCP Client that sends requests to it.

Write mcp_client_stdio.py as follows:

```python
import asyncio
```

Note: this calls the weather MCP Server written in the previous section, so weather.py must be in the same directory as mcp_client_stdio.py.

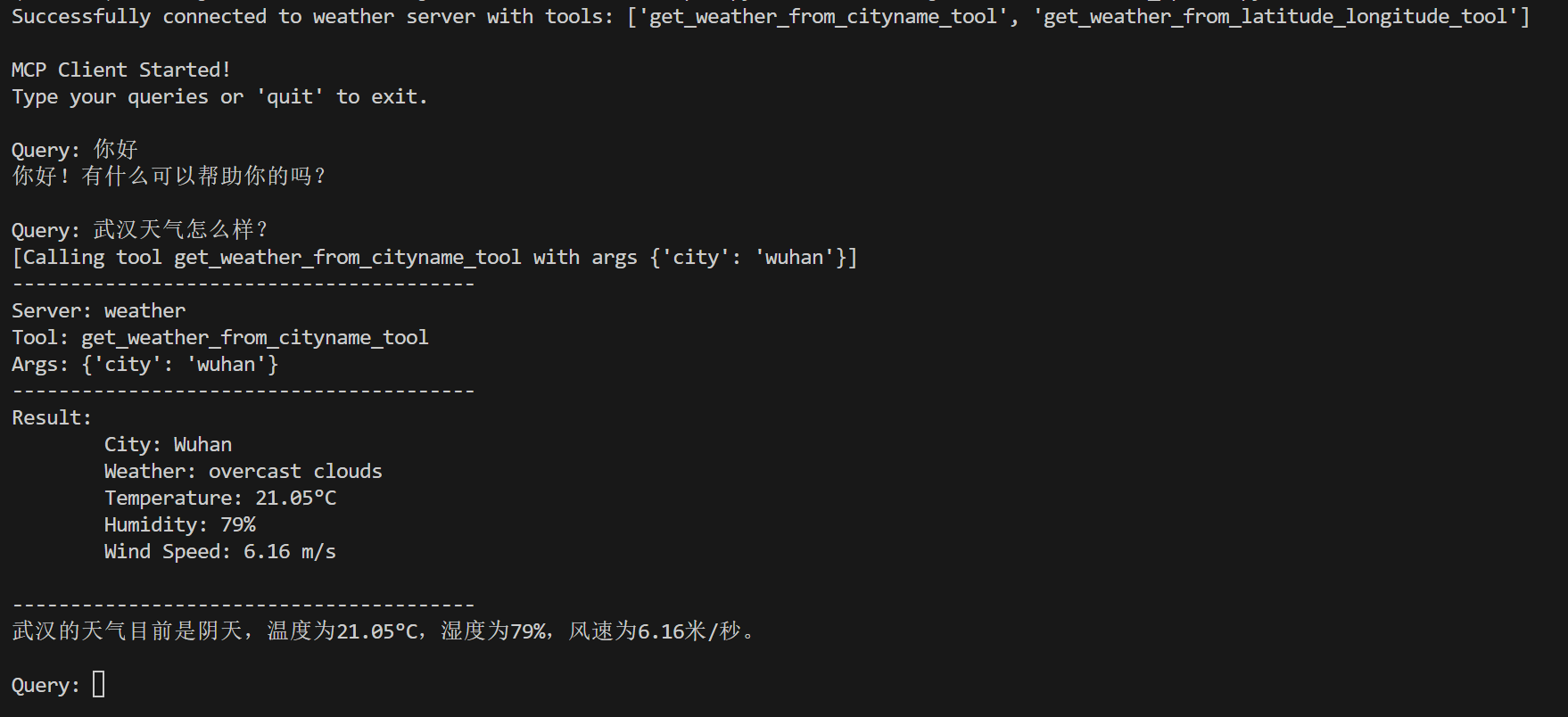

Demo of the code running:

The MCP Server is called successfully!
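With a prompt-based approach, the client has to find the tool call inside the model's plain-text reply. The exact format requested by MCP_Prompt.txt is not shown here, so the sketch below assumes a hypothetical XML-like `<use_mcp_tool>` block (modeled on the style Cline uses) with server name, tool name, and JSON arguments, and extracts it with a regular expression.

```python
import json
import re

# Hypothetical tool-call format the system prompt asks the model to emit;
# adjust the pattern to whatever format your own MCP_Prompt.txt specifies.
TOOL_CALL_RE = re.compile(
    r"<use_mcp_tool>\s*"
    r"<server_name>(?P<server>.*?)</server_name>\s*"
    r"<tool_name>(?P<tool>.*?)</tool_name>\s*"
    r"<arguments>(?P<args>.*?)</arguments>\s*"
    r"</use_mcp_tool>",
    re.DOTALL,
)

def parse_tool_call(reply):
    """Return (server, tool, arguments) if the model reply contains a tool call, else None."""
    m = TOOL_CALL_RE.search(reply)
    if m is None:
        return None  # plain answer, nothing to execute
    try:
        args = json.loads(m.group("args"))
    except json.JSONDecodeError as exc:
        raise ValueError(f"tool arguments are not valid JSON: {exc}") from exc
    return m.group("server").strip(), m.group("tool").strip(), args

reply = """I'll check the alerts first.
<use_mcp_tool>
<server_name>weather</server_name>
<tool_name>get_alerts</tool_name>
<arguments>{"state": "CA"}</arguments>
</use_mcp_tool>"""
print(parse_tool_call(reply))  # ('weather', 'get_alerts', {'state': 'CA'})
```

In the MCP Python SDK the parsed tool name and arguments would then go to `ClientSession.call_tool(name, arguments)`, and the result would be appended to the conversation for the model's next turn.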

The SSE transport

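SSE (Server-Sent Events) is an HTTP-based streaming mechanism that lets a server push events to the client in one direction over HTTP. It suits scenarios where the client needs to receive pushes from the server, typically real-time data updates.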
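An SSE stream is plain text: `event:` and `data:` lines, with a blank line ending each event. In MCP's HTTP+SSE transport (spec revision 2024-11-05), the server first sends an `endpoint` event whose data is the URI the client should POST its JSON-RPC requests to, then delivers replies as `message` events. The sketch below parses a captured stream and resolves that endpoint against the SSE URL; the session id and reply payload are made up for illustration.

```python
import json
from urllib.parse import urljoin

def parse_sse(lines):
    """Yield (event, data) pairs from an iterable of SSE text lines.
    Covers the subset used here: 'event:' and 'data:' fields, ':' comment lines,
    multi-line data joined with '\n', and a blank line dispatching each event."""
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            if data:
                yield event, "\n".join(data)
            event, data = "message", []  # reset for the next event
            continue
        if line.startswith(":"):
            continue  # comment / keep-alive line
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # one leading space after the colon is dropped
        if field == "event":
            event = value
        elif field == "data":
            data.append(value)
    # An event not terminated by a blank line at end of stream is discarded.

SSE_URL = "http://47.113.225.16:8000/sse"
captured = [
    "event: endpoint\n",
    "data: /messages/?session_id=abc123\n",
    "\n",
    ": ping\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}\n',
    "\n",
]
events = list(parse_sse(captured))
post_url = urljoin(SSE_URL, events[0][1])  # where the client POSTs its requests
reply = json.loads(events[1][1])
print(post_url)  # http://47.113.225.16:8000/messages/?session_id=abc123
```

This split is why an SSE client needs two HTTP connections: a long-lived GET for the event stream and short POSTs for each request.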

Here, too, we can use the weather MCP SSE Server that we deployed on the public internet in the previous section.

Write mcp_client_sse.py as follows:

```python
import asyncio
```

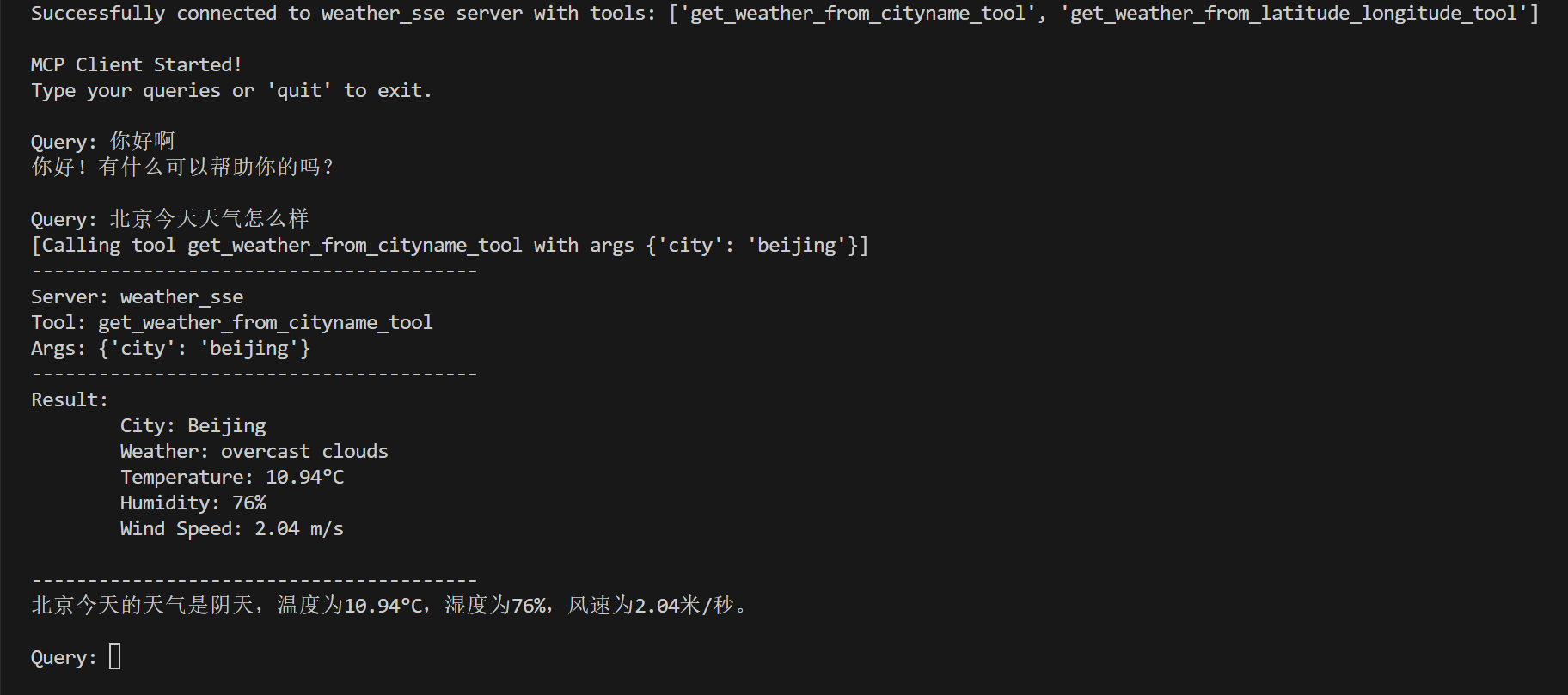

Demo:

The MCP SSE Server is called successfully as well.

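You can also call the MCP SSE Server I have deployed: to make learning easier, the service at http://47.113.225.16:8000/sse is available for testing. You are of course encouraged to try other services as well.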

Loading servers from a configuration file

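After the previous experiments, our own MCP Client can connect to any MCP Server over either the stdio or the SSE transport, but so far it connects to only one server at a time. Anyone who has used Cursor or another MCP client application knows that they load multiple MCP Servers from a JSON configuration file. Can our own MCP Client do the same?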

It certainly can. In the next experiment we write the code to do this.

First, we define our own JSON configuration format, for example:

```json
{
```

Note: to fill in {高德API KEY} (your Amap API key), register an account on the Amap (高德) website, where a key can be obtained for free.

The fields mean the following:

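- Common fields:
  - isActive: whether this MCP Server is enabled.
  - type: the MCP Server type, either stdio or sse.
  - name: an alias for the MCP Server.
- stdio fields:
  - command: the command to run.
  - args: the argument list.
  - env: a dictionary of environment variables.
- sse fields:
  - url: the address of the SSE MCP Server.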
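The sketch below shows one way to load such a file: parse it, skip entries whose `isActive` is false, and check that each remaining entry has the fields its `type` requires. Because the top-level layout of the JSON is not visible above, it assumes a top-level `mcpServers` list whose entries use exactly the fields just described; the server names and the disabled `demo.py` entry are hypothetical.

```python
import json

# Fields each server type must provide, per the field list above.
REQUIRED = {"stdio": ("command",), "sse": ("url",)}

def load_active_servers(config_text):
    """Parse the config and return the active server entries, validated per type.
    Assumes a top-level "mcpServers" list (the article's exact layout is not shown)."""
    config = json.loads(config_text)
    active = []
    for entry in config.get("mcpServers", []):
        if not entry.get("isActive", False):
            continue  # disabled servers are skipped entirely
        kind = entry.get("type")
        if kind not in REQUIRED:
            raise ValueError(f"{entry.get('name')}: unknown type {kind!r}")
        missing = [f for f in REQUIRED[kind] if not entry.get(f)]
        if missing:
            raise ValueError(f"{entry.get('name')}: missing {missing}")
        if kind == "stdio":
            entry.setdefault("args", [])  # args is optional; default to no arguments
        active.append(entry)
    return active

CONFIG = """
{
  "mcpServers": [
    {"name": "weather", "type": "stdio", "isActive": true,
     "command": "python", "args": ["weather.py"]},
    {"name": "weather-sse", "type": "sse", "isActive": true,
     "url": "http://47.113.225.16:8000/sse"},
    {"name": "disabled-demo", "type": "stdio", "isActive": false,
     "command": "python", "args": ["demo.py"]}
  ]
}
"""
servers = load_active_servers(CONFIG)
print([s["name"] for s in servers])  # ['weather', 'weather-sse']
```

With the MCP Python SDK, each stdio entry would then map to `StdioServerParameters(command=..., args=..., env=entry.get("env"))` and each sse entry to `sse_client(entry["url"])`.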

Write mcp_client_mix.py with the following content:

```python
import asyncio
```

The demo looks like this:

As you can see, both tools are called successfully.
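Once several servers are loaded, the client has to remember which server owns each tool so that a tool call from the model reaches the right session. A minimal sketch of such a routing table, assuming each server's `tools/list` result has already been reduced to a list of tool names; the `amap` server and its `maps_geo` tool are hypothetical.

```python
def build_routing_table(tools_by_server):
    """Map tool name -> server name, refusing names offered by two servers."""
    table = {}
    for server, tool_names in tools_by_server.items():
        for tool in tool_names:
            if tool in table:
                raise ValueError(
                    f"tool {tool!r} is offered by both {table[tool]!r} and {server!r}")
            table[tool] = server
    return table

def route(table, tool_name):
    """Return the server that should handle a call to tool_name."""
    try:
        return table[tool_name]
    except KeyError:
        raise KeyError(f"no loaded server provides tool {tool_name!r}") from None

table = build_routing_table({
    "weather": ["get_alerts", "get_forecast"],
    "amap": ["maps_geo"],  # hypothetical server and tool name
})
print(route(table, "maps_geo"))  # amap
```

Rejecting duplicate names keeps routing unambiguous; prefixing each tool name with its server alias is an alternative when two servers legitimately expose the same tool.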

Suggestions for further improvement

In this section we loaded every MCP Server listed in the MCP configuration file and verified that all of their tools can be called successfully.

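However, the current version cannot chain tool calls together, so it cannot combine several tools to answer a single user question. Building on the existing code, you can add this yourself; the implementation is not difficult, so give it a try.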
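The suggested improvement amounts to a loop: ask the model, execute any tool call it emits, feed the result back, and repeat until the model answers without a tool call or a step limit is reached. A minimal synchronous sketch; `ask_model` and `run_tool` are hypothetical callables standing in for the LLM API call and the MCP `tools/call` round trip, and the scripted fake model and fake tools exist only to exercise the loop.

```python
def run_agent(question, ask_model, run_tool, max_steps=5):
    """Alternate between the model and tools until the model gives a final answer.
    ask_model(messages) returns either {"tool": name, "arguments": {...}} or {"answer": text}.
    run_tool(name, arguments) returns the tool result as a string."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        decision = ask_model(messages)
        if "answer" in decision:
            return decision["answer"], messages
        result = run_tool(decision["tool"], decision["arguments"])
        # Record both the call and its result so the next model turn can build on them.
        messages.append({"role": "assistant",
                         "content": f"call {decision['tool']} {decision['arguments']}"})
        messages.append({"role": "user", "content": f"tool result: {result}"})
    raise RuntimeError(f"no final answer after {max_steps} steps")

PREFIX = "tool result: "

def fake_model(messages):
    """Scripted stand-in for the LLM: geocode a city, fetch a forecast, then answer."""
    results = [m["content"] for m in messages if m["content"].startswith(PREFIX)]
    if not results:
        return {"tool": "geocode", "arguments": {"city": "Beijing"}}
    if len(results) == 1:
        return {"tool": "get_forecast", "arguments": {"latitude": 39.9, "longitude": 116.4}}
    return {"answer": "Forecast: " + results[-1][len(PREFIX):]}

def fake_tool(name, arguments):
    """Stand-in for an MCP tools/call round trip with canned results."""
    canned = {"geocode": "39.9,116.4", "get_forecast": "sunny, 25C"}
    return canned[name]

answer, history = run_agent("Weather in Beijing?", fake_model, fake_tool)
print(answer)  # Forecast: sunny, 25C
```

The step limit matters in practice: a model that keeps requesting tools would otherwise loop forever and keep spending tokens.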